StochBullet

An end-to-end RL-based quadruped locomotion framework using PyBullet

Recent work applying Deep Reinforcement Learning to quadruped locomotion (RMA, DreamWaQ) has proven remarkably robust and promising. This work presents an end-to-end RL framework that enables quadruped locomotion on flat terrain using the PyBullet simulation platform. Trained in simulation on flat surfaces perturbed with fractal noise, our approach relies on proprioceptive inputs, eliminating the need for cameras or other exteroceptive sensors. The framework is robust to external pushes and changes in friction.

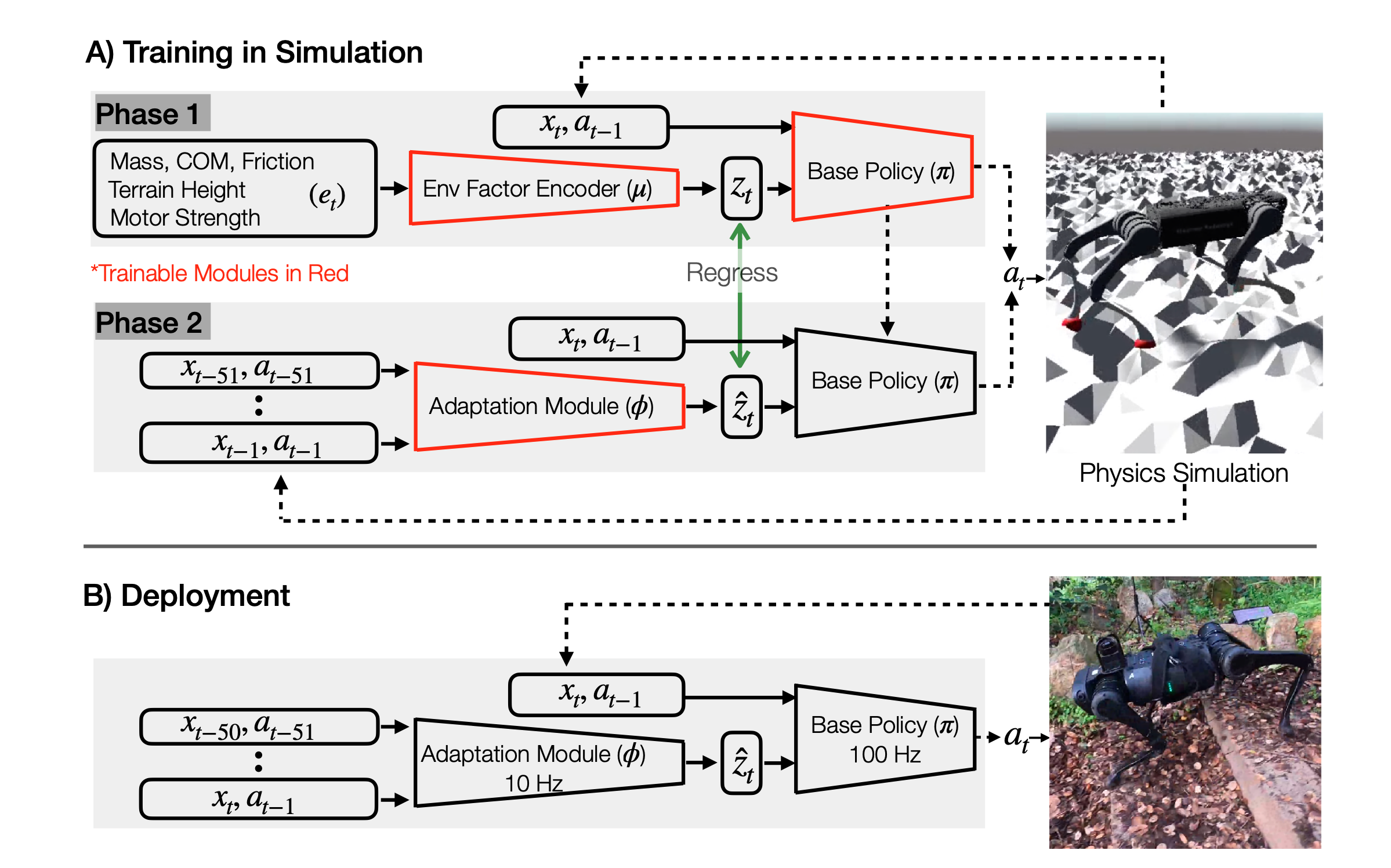

We train with the Proximal Policy Optimization (PPO) algorithm, and the robot’s movement is confined to the x-direction. We follow the teacher-student training paradigm adopted in RMA: a teacher policy receives the robot’s states together with privileged data from the simulation engine, such as terrain friction and contact forces. This privileged data is encoded by a separate encoder, and the resulting encoding serves as the ground truth for training a student policy via supervised learning. The student policy takes a history of observations and tries to estimate the encoded privileged information. The policy outputs 12-dimensional target joint angles, expressed as offsets from the robot’s default joint angles in its standing pose.
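The pieces described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the default pose values, the action scale, the history length, and the use of mean squared error as the supervised loss are all hypothetical choices, and none of the names below come from the StochBullet code.

```python
from collections import deque

NUM_JOINTS = 12  # the text states a 12-dim joint-angle output

# Hypothetical standing pose in radians: (hip, thigh, knee) repeated for 4 legs.
DEFAULT_JOINT_ANGLES = [0.0, 0.8, -1.5] * 4
ACTION_SCALE = 0.25  # hypothetical scale applied to the raw policy output


def action_to_joint_targets(action, default=DEFAULT_JOINT_ANGLES, scale=ACTION_SCALE):
    """Treat the policy output as an offset from the default standing pose."""
    if len(action) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} action values, got {len(action)}")
    return [d + scale * a for d, a in zip(default, action)]


class ObservationHistory:
    """Fixed-length buffer of recent observations fed to the student."""

    def __init__(self, length, obs_dim):
        # Start with zero-filled frames so the buffer always has `length` entries.
        self._frames = deque([[0.0] * obs_dim for _ in range(length)], maxlen=length)

    def push(self, obs):
        self._frames.append(list(obs))  # the oldest frame is dropped automatically

    def flat(self):
        """Concatenate all frames, oldest first, into one input vector."""
        return [x for frame in self._frames for x in frame]


def latent_mse(student_latent, teacher_latent):
    """Assumed supervised loss: mean squared error against the teacher's encoding."""
    if len(student_latent) != len(teacher_latent):
        raise ValueError("latent vectors must have the same length")
    squared = ((s - t) ** 2 for s, t in zip(student_latent, teacher_latent))
    return sum(squared) / len(teacher_latent)
```

With a zero action the targets equal the default pose, so an untrained policy whose outputs are near zero keeps the robot close to standing. This is one common reason for parameterizing actions as offsets.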

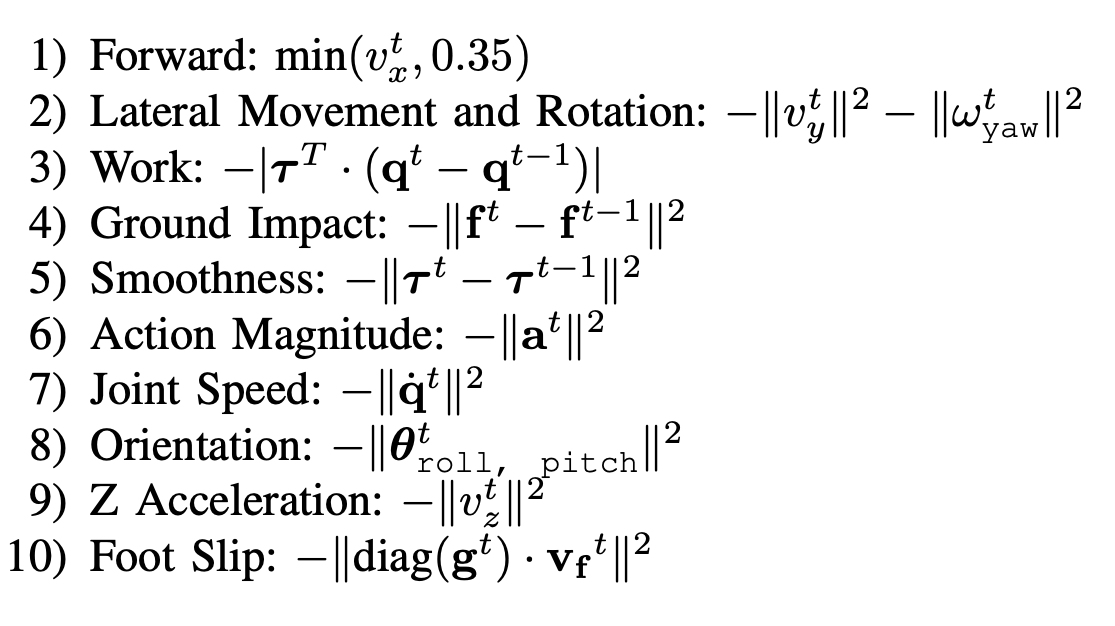

We adopt the reward functions employed by Rapid Motor Adaptation (RMA) for our training.

To guide learning, we use a fixed curriculum to adjust the reward coefficients, manage external pushes, and handle friction changes. Following RMA, the coefficients of the negative reward terms are initialized with very small values and gradually increased as training progresses. Without this, during the early stages of training the task reward is overwhelmed by penalties from auxiliary objectives such as the energy minimization, action rate, and z-velocity terms, and the robot abandons its task or chooses early termination.
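As a rough illustration of such a curriculum, the sketch below scales all penalty terms by a single multiplier that starts small and approaches 1 as training proceeds. The schedule form (`k ← k ** exponent`), the constants `k0` and `exponent`, and the function names are assumptions made for the example, not values taken from this work.

```python
def penalty_scale(iteration, k0=0.03, exponent=0.997):
    """Multiplier applied to penalty terms at a given training iteration.

    Unrolls the update k <- k ** exponent starting from k0, which gives
    k_t = k0 ** (exponent ** t). For 0 < k0 < 1 and 0 < exponent < 1 the
    multiplier rises monotonically from k0 toward 1.
    """
    if iteration < 0:
        raise ValueError("iteration must be non-negative")
    return k0 ** (exponent ** iteration)


def total_reward(task_reward, penalties, iteration):
    """Task reward minus curriculum-scaled penalties.

    `penalties` maps a term name (e.g. "energy") to a non-negative cost.
    """
    k = penalty_scale(iteration)
    return task_reward - k * sum(penalties.values())
```

Early in training the penalties barely affect the total reward, so the policy first learns to move; later they are applied at close to full strength.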

All policy evaluations are performed on Stoch3, our custom medium-sized quadruped. Direction tracking is left for future work.

All code will be made publicly available soon on the StochLab GitHub.