QuadScape

Diverse quadruped locomotive behaviors on different terrains

This work presents a novel approach to quadruped locomotion control across diverse terrains, combining reinforcement learning (RL) with proprioceptive observations. Existing literature focuses either on enabling quadrupeds to follow various gait patterns or on using trot gaits to cross challenging terrain; little attention has been given to controllers that can demonstrate different gaits across varied terrain types.

Our study introduces an RL-based methodology for controlling quadruped locomotion over a range of terrains, leveraging multiple gaits including trotting, hopping and bounding.

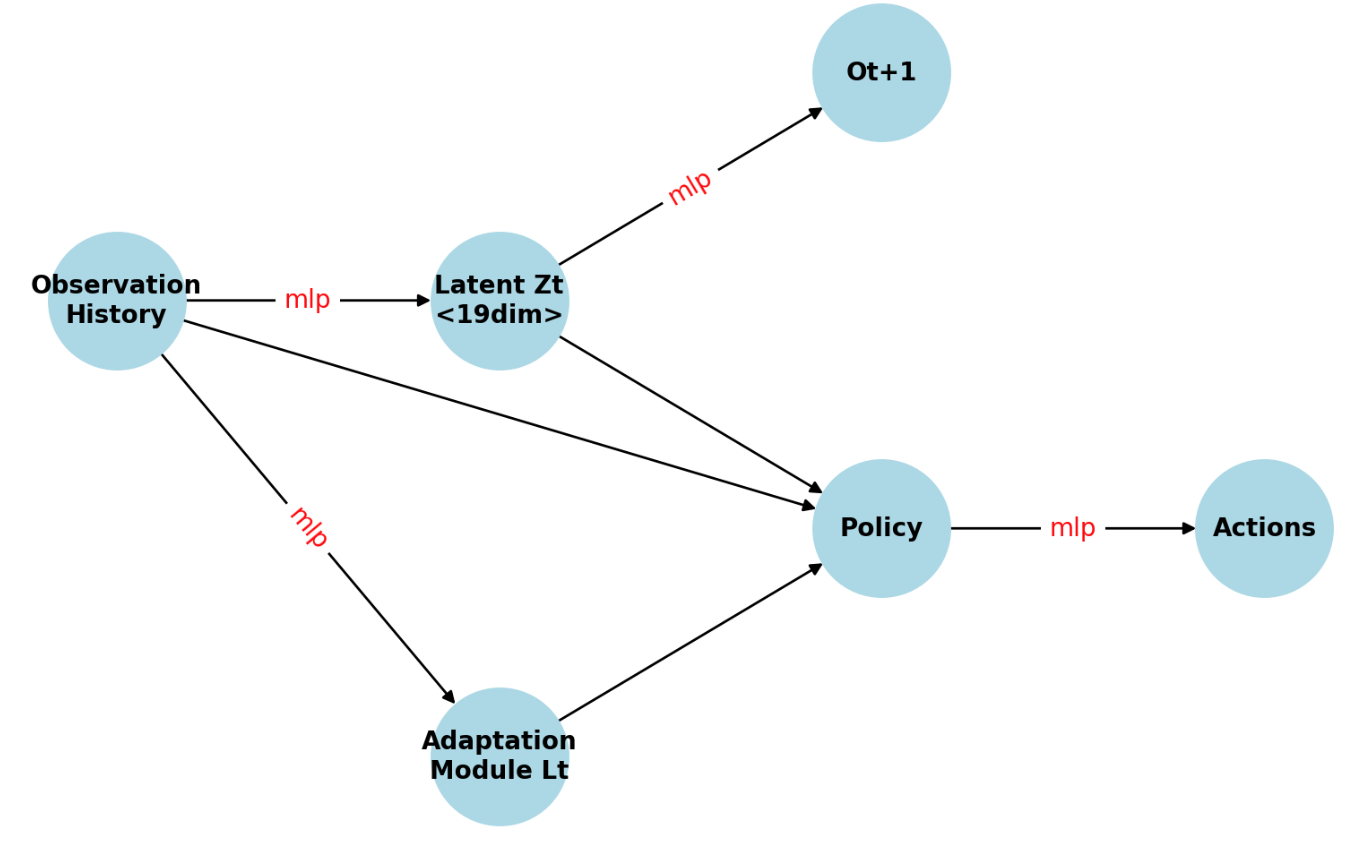

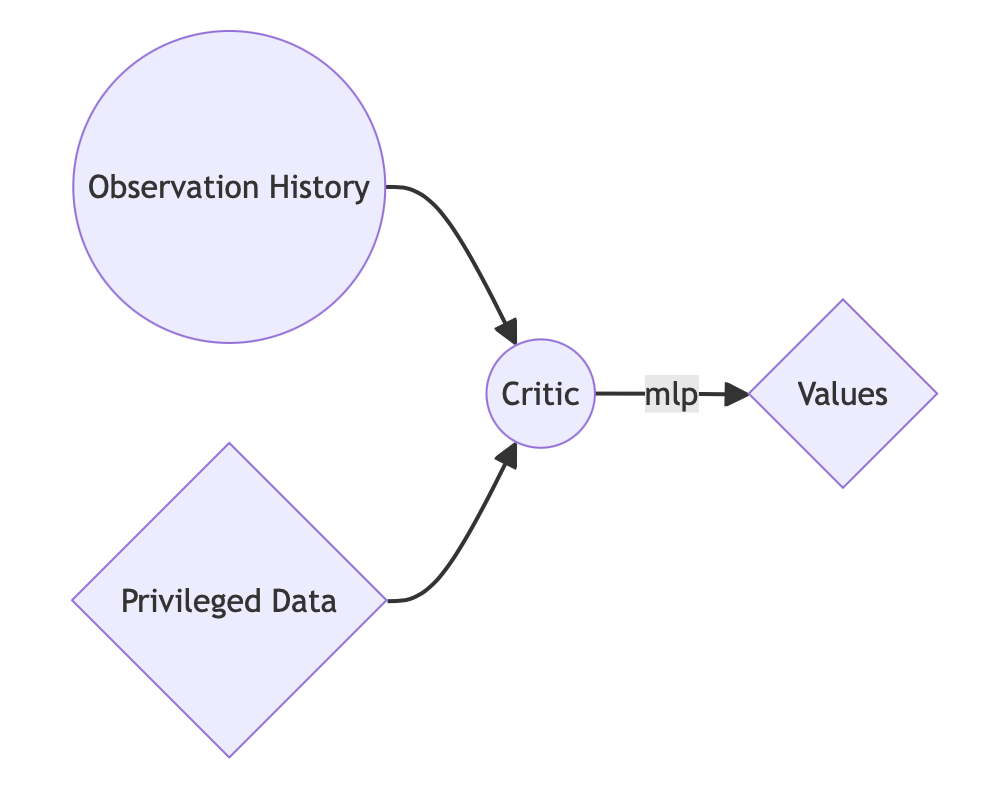

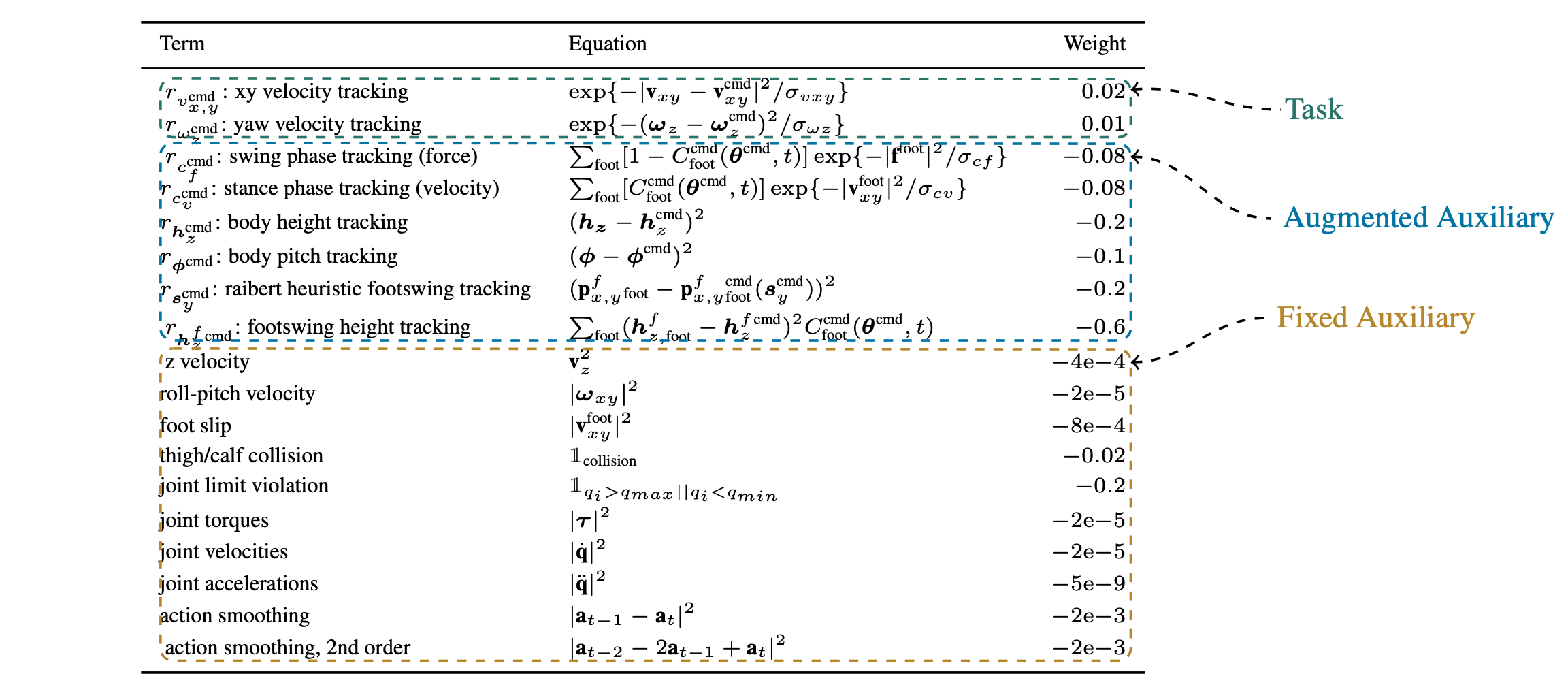

We train our RL policies in Isaac Gym simulation. We use the asymmetric actor-critic framework, in which the actor receives only partial state information (a POMDP setting) while the critic has access to the full state, including privileged information. Restricting the actor to partial observations mirrors the partial observability of real-world deployment, which improves the adaptability and robustness of the learned policy.

We propose an asymmetric reward architecture in which robots navigating uneven terrain receive smaller coefficients on the negative auxiliary rewards than robots on flat surfaces. In our experiments, we dedicate x% of the total terrain area to flat surface and the remaining (100 - x)% to uneven surfaces composed of stairs and slopes. Correspondingly, x% of the robots are initialised on the flat surface and receive the full scale of auxiliary rewards, while the rest are initialised on uneven ground and receive a reduced scale of auxiliary rewards. This adaptation, implemented in the Isaac Gym environment, lets the policy balance risk and performance across different terrains.
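The actor/critic observation split described above can be illustrated with a minimal sketch. This is not the project's implementation: the `RobotState` fields, and the choice of which quantities count as proprioceptive versus privileged, are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RobotState:
    # Proprioceptive quantities (assumed measurable on the real robot).
    joint_pos: List[float]
    joint_vel: List[float]
    base_ang_vel: List[float]
    # Privileged quantities (assumed available only in simulation).
    base_lin_vel: List[float]
    terrain_heights: List[float]
    friction: float


def actor_observation(state: RobotState) -> List[float]:
    """Partial observation: proprioception only."""
    return state.joint_pos + state.joint_vel + state.base_ang_vel


def critic_observation(state: RobotState) -> List[float]:
    """Full state: the actor's observation plus privileged information."""
    privileged = state.base_lin_vel + state.terrain_heights + [state.friction]
    return actor_observation(state) + privileged


# Hypothetical state for a 12-joint quadruped with 4 terrain height samples.
example_state = RobotState(
    joint_pos=[0.0] * 12,
    joint_vel=[0.0] * 12,
    base_ang_vel=[0.0] * 3,
    base_lin_vel=[0.5, 0.0, 0.0],
    terrain_heights=[0.0, 0.1, 0.1, 0.2],
    friction=0.8,
)
actor_obs = actor_observation(example_state)
critic_obs = critic_observation(example_state)
```

In this sketch the critic's input is a strict superset of the actor's, so the value estimate can use terrain and friction information that the deployed policy never sees.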

By implementing asymmetric rewards, our approach differentiates between robots navigating flat surfaces and those traversing uneven terrain. On flat surfaces, the emphasis is placed on learning and accurately tracking behavioral commands. Conversely, for robots navigating uneven terrain, the priority shifts to effectively tracking velocity and successfully traversing the challenging landscape, with less strict adherence to auxiliary commands.
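The reward asymmetry can be sketched as follows. The function names, the `uneven_scale` value of 0.25, and the representation of auxiliary terms as non-negative penalty magnitudes are hypothetical choices for illustration; the actual coefficients and reward terms are not given here.

```python
import random
from typing import List


def assign_terrain(num_robots: int, flat_fraction: float, seed: int = 0) -> List[bool]:
    """Return one flag per robot: True if it is initialised on flat ground."""
    rng = random.Random(seed)
    num_flat = round(num_robots * flat_fraction)
    on_flat = [True] * num_flat + [False] * (num_robots - num_flat)
    rng.shuffle(on_flat)
    return on_flat


def total_reward(
    task_reward: float,
    aux_penalties: List[float],
    on_flat: bool,
    uneven_scale: float = 0.25,  # assumed value, for illustration only
) -> float:
    """Combine the tracking reward with terrain-dependent auxiliary penalties.

    aux_penalties holds non-negative penalty magnitudes. Robots on flat
    ground receive them at full scale; robots on uneven ground receive
    them multiplied by uneven_scale.
    """
    scale = 1.0 if on_flat else uneven_scale
    return task_reward - scale * sum(aux_penalties)


flags = assign_terrain(num_robots=10, flat_fraction=0.3)
flat_reward = total_reward(1.0, [0.2, 0.2], on_flat=True)
uneven_reward = total_reward(1.0, [0.2, 0.2], on_flat=False)
```

With identical task reward and penalties, the robot on uneven ground ends up with the higher total, which is what relaxes adherence to auxiliary commands there.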

Additionally, we integrate Control Barrier Function (CBF)-based rewards to encourage less aggressive and more energy-efficient locomotion.
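As a rough illustration of how a CBF condition can be turned into a reward term, the sketch below penalises violations of the standard discrete-time condition ḣ + αh ≥ 0 for a barrier function h. The specific barrier (a joint-velocity limit), the parameter `alpha`, and the function names are assumptions; the barrier functions actually used in this work are described in the detailed report.

```python
def barrier(joint_vel: float, vel_limit: float) -> float:
    """Barrier value h, non-negative while |joint_vel| <= vel_limit (hypothetical choice)."""
    return vel_limit ** 2 - joint_vel ** 2


def cbf_reward(
    vel_prev: float,
    vel_next: float,
    vel_limit: float,
    dt: float,
    alpha: float = 1.0,  # assumed class-K gain
) -> float:
    """Return 0 when the CBF condition holds, else a negative penalty.

    The time derivative of h is approximated by a finite difference
    between two consecutive simulation steps.
    """
    h_prev = barrier(vel_prev, vel_limit)
    h_next = barrier(vel_next, vel_limit)
    h_dot = (h_next - h_prev) / dt
    violation = max(0.0, -(h_dot + alpha * h_prev))
    return -violation if violation > 0.0 else 0.0


# A gentle motion well inside the limit satisfies the condition.
safe_reward = cbf_reward(vel_prev=0.0, vel_next=0.1, vel_limit=10.0, dt=0.02)
# A rapid acceleration past the limit violates it and is penalised.
unsafe_reward = cbf_reward(vel_prev=9.0, vel_next=10.5, vel_limit=10.0, dt=0.02)
```

Because the penalty grows as the state approaches the boundary of the safe set too quickly, a term of this form discourages abrupt, aggressive motions rather than only punishing limit violations after they occur.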

Looking ahead, we envision leveraging diffusion models to further enhance our approach.

A detailed report of this work is available under the “Publications and Technical Reports” subsection of this webpage.